
Master AWS DynamoDB cost optimization with strategies for reducing cloud spend, leveraging automation tools, & maximizing resource efficiency.

Amazon DynamoDB is a managed service that enables you to provision semi-structured NoSQL document storage for your applications. In DynamoDB, you create tables with a specified partition key (e.g., product_category) and an optional sort key (e.g., product_name).

Once the table is created, your application can store individual items in the table, each with a series of attributes, also known as key-value pairs. Each item must have attributes representing the partition key and, if applicable, the sort key.

Items can also specify additional attributes beyond the partition and sort key attributes (e.g., price, description, etc.).

With DynamoDB, you are billed for the amount of storage you’re using in your tables, along with the capacity to read and write items into your tables. You’re also billed for other capabilities, such as exporting and importing data from DynamoDB, backup & restore operations, caching, data egress, and stream capture.

To cost-optimize DynamoDB tables, start by obtaining an inventory of the tables themselves. Like many other AWS services, Amazon DynamoDB is a “regional” service. Each table is created in a specific AWS region, with your data replicated across three facilities within that region.

DynamoDB also supports a feature called Global Tables. Despite the name, a global table is still created in a specific region, and it then sets up replicas in other regions.

You can retrieve a list of your DynamoDB tables using automation tools like the AWS CLI or AWS PowerShell modules.

If you want to use PowerShell to query for DynamoDB tables, you can use the commands below. First, install the module for DynamoDB to get access to the commands for the service.

Next, set your access key and secret key, if you’re using static credentials for an IAM User. Listing table names and retrieving the detailed configuration for each table are separate DynamoDB APIs, so there is a separate PowerShell command for each.

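```powershell
# Install the DynamoDB module for the current user.
Install-Module -Name AWS.Tools.DynamoDBv2 -Scope CurrentUser -Force

# Static IAM User credentials; replace the placeholders with your own keys.
Set-AWSCredential -AccessKey YOUR_ACCESS_KEY -SecretKey YOUR_SECRET_KEY

# List the table names, then retrieve the detailed configuration of each table.
$TableList = Get-DDBTableList
foreach ($Table in $TableList) {
    Get-DDBTable -TableName $Table
}
```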
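
Because DynamoDB is a regional service, a complete inventory has to visit each region. The following is a minimal sketch of that idea, not a definitive implementation. The function name `Get-DDBTableInventory` and the output columns are hypothetical, and it assumes the `AWS.Tools.Common` and `AWS.Tools.DynamoDBv2` modules are installed and credentials are already configured.

```powershell
# Hypothetical helper: inventory DynamoDB tables across one or more regions.
function Get-DDBTableInventory {
    param(
        # Regions to scan; defaults to every region known to the AWS tools.
        [string[]] $Region = (Get-AWSRegion).Region
    )
    foreach ($r in $Region) {
        try {
            foreach ($name in (Get-DDBTableList -Region $r -ErrorAction Stop)) {
                $table = Get-DDBTable -TableName $name -Region $r
                [pscustomobject]@{
                    Region      = $r
                    TableName   = $name
                    SizeBytes   = $table.TableSizeBytes
                    ItemCount   = $table.ItemCount
                    # May be empty for tables that have always used provisioned capacity.
                    BillingMode = $table.BillingModeSummary.BillingMode
                }
            }
        } catch {
            # Regions that are not enabled for the account typically fail here.
            Write-Warning "Skipping ${r}: $($_.Exception.Message)"
        }
    }
}

# Example usage (requires AWS credentials):
# Get-DDBTableInventory -Region us-west-1, us-east-1 | Format-Table
```

Emitting one object per table keeps the result easy to sort, filter, or export with standard PowerShell commands such as `Sort-Object` and `Export-Csv`.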

You can explore the User Guide and Command Reference for the AWS PowerShell modules, to learn more about the commands available for Amazon DynamoDB.

There are a variety of ways to approach cost optimization for Amazon DynamoDB.

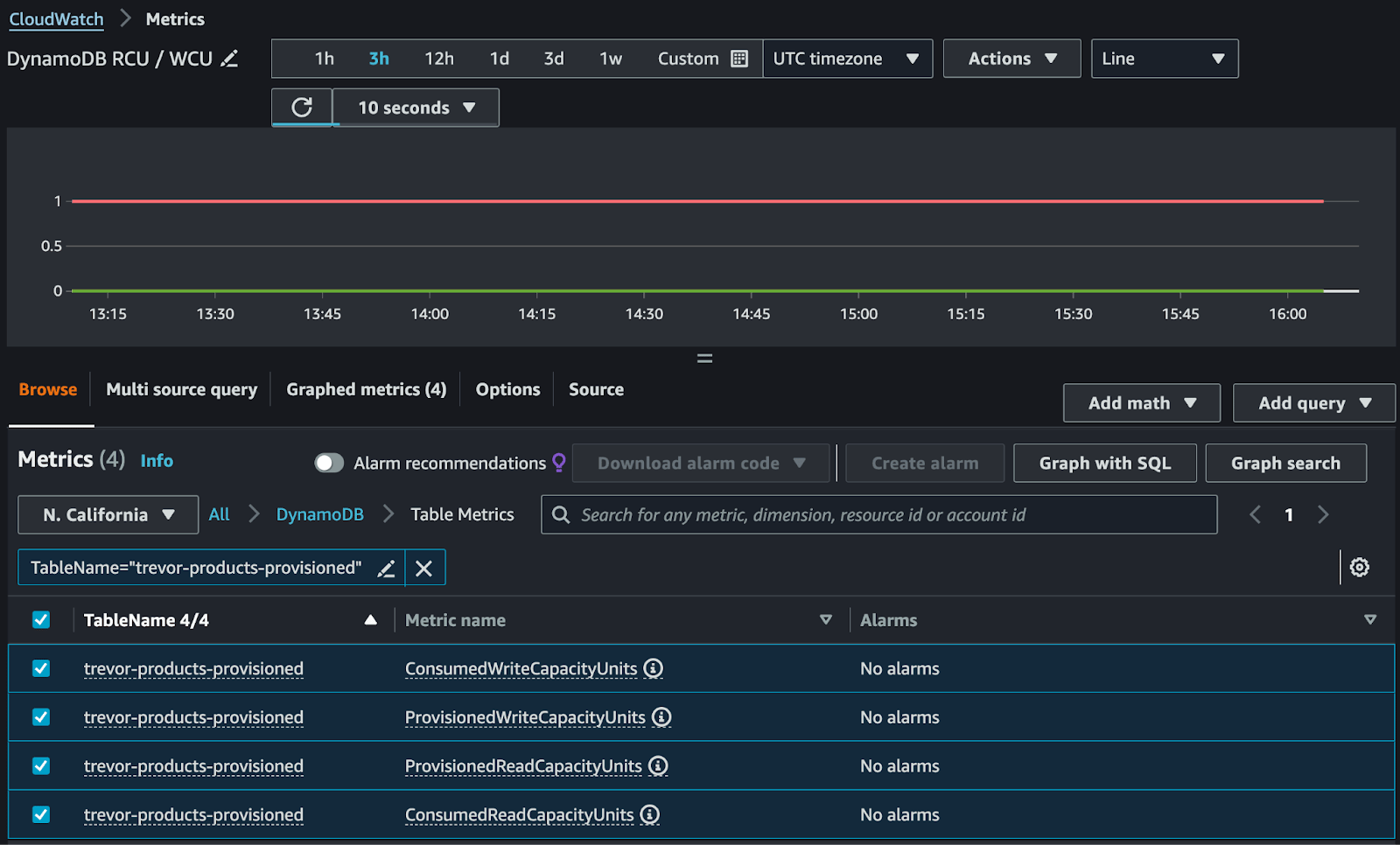

One of the easiest ways to reduce cloud spend on Amazon DynamoDB tables is to reduce the number of pre-provisioned Read & Write Capacity Units (RCU / WCU) on the table. This only applies to tables that are using the Provisioned capacity mode (pricing model).

For example, let’s say that you provision 500 RCU and 300 WCU for a DynamoDB table, but during development you find that you’re peaking at 100 RCU and 50 WCU. The delta between the provisioned capacity and what’s actually consumed represents your potential cost savings.
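
The arithmetic behind that example can be sketched as follows. The hourly prices are illustrative assumptions, not quoted rates, so check the DynamoDB pricing page for your region. The function name `Get-UnusedCapacityCost` is hypothetical.

```powershell
# Hypothetical helper: estimate the monthly cost of provisioned capacity that is never used.
function Get-UnusedCapacityCost {
    param(
        [int] $ProvisionedRcu,
        [int] $PeakRcu,
        [int] $ProvisionedWcu,
        [int] $PeakWcu,
        # ASSUMPTION: illustrative prices per capacity-unit-hour; substitute your region's rates.
        [double] $PricePerRcuHour = 0.00013,
        [double] $PricePerWcuHour = 0.00065,
        [int] $HoursPerMonth = 730
    )
    $unusedRcu = [math]::Max(0, $ProvisionedRcu - $PeakRcu)
    $unusedWcu = [math]::Max(0, $ProvisionedWcu - $PeakWcu)
    [pscustomobject]@{
        UnusedRcu               = $unusedRcu
        UnusedWcu               = $unusedWcu
        EstimatedMonthlySavings = ($unusedRcu * $PricePerRcuHour + $unusedWcu * $PricePerWcuHour) * $HoursPerMonth
    }
}

# The example from the text: 500 / 300 provisioned, peaking at 100 / 50.
Get-UnusedCapacityCost -ProvisionedRcu 500 -PeakRcu 100 -ProvisionedWcu 300 -PeakWcu 50
```

With these inputs, 400 RCU and 250 WCU go unused. In practice you would keep some headroom above the observed peak rather than provisioning to it exactly, so treat the result as an upper bound on savings.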

To find out if your DynamoDB tables are under-utilized, you can query the built-in CloudWatch metrics that are emitted from the DynamoDB service.
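
One way to run that query from PowerShell is sketched below. It assumes the `AWS.Tools.CloudWatch` module is installed and that `Get-CWMetricStatistic` returns a response object with a `Datapoints` collection. The function name `Get-DDBPeakConsumption` and its defaults are hypothetical.

```powershell
# Hypothetical helper: peak consumed capacity units per second for a table.
function Get-DDBPeakConsumption {
    param(
        [Parameter(Mandatory)] [string] $TableName,
        [Parameter(Mandatory)] [string] $Region,
        [ValidateSet('ConsumedReadCapacityUnits', 'ConsumedWriteCapacityUnits')]
        [string] $MetricName = 'ConsumedReadCapacityUnits',
        [int] $Days = 14,
        [int] $PeriodSeconds = 3600
    )
    $end   = [datetime]::UtcNow
    $start = $end.AddDays(-$Days)
    $response = Get-CWMetricStatistic -Namespace 'AWS/DynamoDB' -MetricName $MetricName `
        -Dimension @{ Name = 'TableName'; Value = $TableName } `
        -UtcStartTime $start -UtcEndTime $end `
        -Period $PeriodSeconds -Statistic 'Sum' -Region $Region

    # Sum / period length = average units consumed per second during that period.
    $peak = 0.0
    foreach ($dp in $response.Datapoints) {
        $perSecond = $dp.Sum / $PeriodSeconds
        if ($perSecond -gt $peak) { $peak = $perSecond }
    }
    $peak
}

# Example usage (requires AWS credentials):
# Get-DDBPeakConsumption -TableName trevor-products -Region us-west-1
```

Hourly periods smooth out short bursts, so the value returned here can understate the true peak. A shorter period gives a sharper picture, at the cost of covering a shorter time window per request.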

If your table is configured with the On-Demand (also known as Pay-per-Request) capacity mode, you pay only for the read and write requests that your application actually makes. There is no pre-provisioned capacity. The downside of On-Demand capacity mode is that the per-request cost can be significantly higher than provisioned capacity, especially for workloads with steady, high read and write throughput.

Amazon DynamoDB supports integration with the Application Auto Scaling (AAS) service. This allows you to configure a scaling policy that adjusts the provisioned read & write capacity for your DynamoDB tables and Global Secondary Indexes (GSI).

Although the AWS Management Console exposes auto scaling as a feature of DynamoDB, it is actually part of the separate AAS service. Hence, any API calls that deal with configuring auto scaling policies will be sent to the AAS APIs, rather than DynamoDB.

Enabling auto-scaling for DynamoDB tables helps to remove the guesswork from setting your read/write capacity units appropriately for your workload.

To find out if a DynamoDB table has auto-scaling enabled, you can use the DescribeScalingPolicies API for the Application Auto Scaling service. The ServiceNamespace request parameter should be set to “dynamodb” to return the scaling policies related to DynamoDB tables and GSIs.

In order to retrieve scaling policies for your DynamoDB tables and GSIs, you can use the following PowerShell commands.

```powershell
Install-Module -Name AWS.Tools.ApplicationAutoScaling -Scope CurrentUser -Force

Get-AASScalingPolicy -Region us-west-1 -ServiceNamespace dynamodb
```

This command will retrieve all of the auto-scaling policies for all tables and GSIs, in the specified AWS region. You will need to examine the ResourceId field of each result to determine which table or GSI the scaling policy is bound to. If you don’t see any results for a specific DynamoDB table or GSI, then auto-scaling is most likely not enabled for that resource.
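
For DynamoDB, the ResourceId takes the form `table/<table-name>` for a table, or `table/<table-name>/index/<index-name>` for a GSI. A small helper can split it into its parts. The function name `ConvertFrom-DDBScalingResourceId` is hypothetical, and this is only a sketch of the parsing step.

```powershell
# Hypothetical helper: split an Application Auto Scaling ResourceId for DynamoDB.
function ConvertFrom-DDBScalingResourceId {
    param([Parameter(Mandatory)] [string] $ResourceId)

    $parts = $ResourceId -split '/'
    if ($parts.Count -eq 2 -and $parts[0] -eq 'table') {
        # "table/<table-name>"
        [pscustomobject]@{ ResourceType = 'Table'; TableName = $parts[1]; IndexName = $null }
    }
    elseif ($parts.Count -eq 4 -and $parts[0] -eq 'table' -and $parts[2] -eq 'index') {
        # "table/<table-name>/index/<index-name>"
        [pscustomobject]@{ ResourceType = 'GSI'; TableName = $parts[1]; IndexName = $parts[3] }
    }
    else {
        throw "Unrecognized DynamoDB ResourceId: $ResourceId"
    }
}

# Example usage (requires AWS credentials):
# Get-AASScalingPolicy -Region us-west-1 -ServiceNamespace dynamodb |
#     ForEach-Object { ConvertFrom-DDBScalingResourceId -ResourceId $_.ResourceId }
```

Comparing the parsed table and index names against your table inventory shows which resources have no scaling policy attached.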

In Amazon DynamoDB, there are two types of indexes: Global and Local Secondary Indexes (GSI / LSI). Each of these has pros and cons, which you can learn more about in our LSI video and GSI video. Regardless of which type of index you create, the items stored in the index are based on the items in the underlying “base table.”

An index is directly associated with a specific DynamoDB table, and cannot exist as an independent entity.

When you create an index on a DynamoDB table, it consumes additional storage that you’re charged for. If business applications are not making queries against an index, it should be safe to delete the index, reducing the total storage you’re paying for.

A GSI has its own RCU / WCU provisioning settings, separate from the base table. An LSI uses the provisioned capacity of the base table.

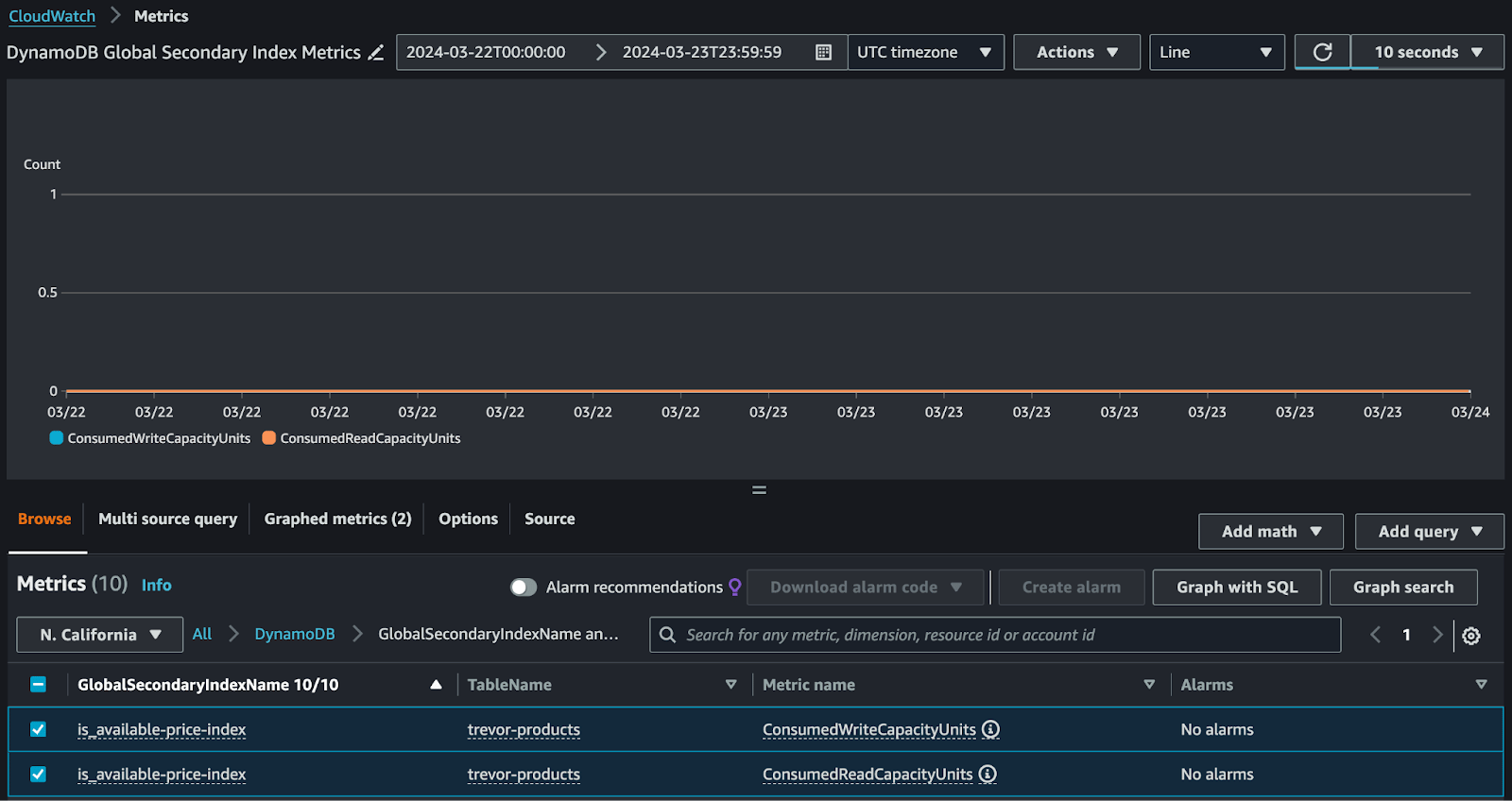

You can use CloudWatch to monitor metrics for indexes, to determine if they’re being used or not. If you explore the DynamoDB namespace in CloudWatch Metrics, there is a sub-category called GlobalSecondaryIndexName and Table Metrics.

Under there, you can find metrics related to a specific GSI. The screenshot below shows the metrics indicating the RCUs / WCUs that are actually consumed from a GSI. For the selected time period, you can see that there have been zero read/write units used, indicating that this GSI is completely idle, and can likely be removed.
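
The same check can be scripted. The sketch below assumes the `AWS.Tools.CloudWatch` module is installed and that `Get-CWMetricStatistic` returns a response object with a `Datapoints` collection. The function name `Test-DDBIndexIdle` is hypothetical. It looks only at consumed reads, on the assumption that reads are the clearest sign of whether an application is querying the index, since a GSI can still consume write capacity whenever the base table is written to.

```powershell
# Hypothetical helper: returns $true when a GSI consumed no read capacity in the window.
function Test-DDBIndexIdle {
    param(
        [Parameter(Mandatory)] [string] $TableName,
        [Parameter(Mandatory)] [string] $IndexName,
        [Parameter(Mandatory)] [string] $Region,
        [int] $Days = 30
    )
    $end   = [datetime]::UtcNow
    $start = $end.AddDays(-$Days)
    # GSI metrics are published with both dimensions set.
    $dimensions = @(
        @{ Name = 'TableName'; Value = $TableName },
        @{ Name = 'GlobalSecondaryIndexName'; Value = $IndexName }
    )
    $response = Get-CWMetricStatistic -Namespace 'AWS/DynamoDB' `
        -MetricName 'ConsumedReadCapacityUnits' -Dimension $dimensions `
        -UtcStartTime $start -UtcEndTime $end `
        -Period 86400 -Statistic 'Sum' -Region $Region

    # Add up the daily totals; no datapoints at all also counts as idle.
    $totalReads = 0.0
    foreach ($dp in $response.Datapoints) { $totalReads += $dp.Sum }
    $totalReads -eq 0
}

# Example usage (requires AWS credentials; the index name is a placeholder):
# Test-DDBIndexIdle -TableName trevor-products -IndexName my-index -Region us-west-1
```

Choose a window long enough to cover infrequent jobs, such as monthly reports, before treating an index as safe to remove.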

NOTE: LSIs do not have their own CloudWatch metrics, unlike GSIs, because they rely on the base table’s provisioned capacity.

You can periodically take on-demand backups of your Amazon DynamoDB tables. These backups will exist indefinitely unless you delete them, or you implement a backup policy in AWS Backup. On-demand backups are priced per GB-month and are available at two different tiers: warm backups ($0.10 per GB-month) and cold backups ($0.03 per GB-month).
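
To put those prices in context, the monthly cost of retained backups can be estimated with a few lines of PowerShell. This sketch uses the per-GB-month prices quoted above, which may differ by region and over time. The function name `Get-DDBBackupMonthlyCost` is hypothetical.

```powershell
# Hypothetical helper: estimate the monthly cost of retained DynamoDB backups.
function Get-DDBBackupMonthlyCost {
    param(
        [Parameter(Mandatory)] [double] $SizeGB,
        [ValidateSet('Warm', 'Cold')] [string] $Tier = 'Warm'
    )
    # Prices per GB-month as quoted in this article; verify against current pricing.
    $pricePerGBMonth = @{ Warm = 0.10; Cold = 0.03 }
    $SizeGB * $pricePerGBMonth[$Tier]
}

# Compare both tiers for 500 GB of retained backups.
foreach ($tier in 'Warm', 'Cold') {
    '{0}: ${1:N2} per month' -f $tier, (Get-DDBBackupMonthlyCost -SizeGB 500 -Tier $tier)
}
```

At those rates, 500 GB of backups costs about $50 per month in the warm tier and about $15 per month in the cold tier, and the charge recurs every month until the backups are deleted.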

Warning: Deleting backups of DynamoDB is a permanent operation and cannot be undone.

There are a couple of different ways to back up DynamoDB tables. You can use the built-in backup functionality, or you can use the integration with the AWS Backup service. You can see these two options if you attempt to create a new on-demand backup from the DynamoDB console.

If you navigate to the DynamoDB console and select one of your tables, you should see a tab called Backups. There is a setting to enable or disable Point-in-Time Recovery (PITR), and beneath that there’s a list of the backups that you’ve created for your table.

You can use the ListBackups API for DynamoDB to list backups for all tables, or a specific table, within a given AWS Region. To delete a specific backup, you can call the DeleteBackup API, and pass in the Amazon Resource Name (ARN) of the backup that you want to delete.

To use PowerShell to list the backups in the DynamoDB service, across all tables within a given AWS Region, you can use the following commands.

```powershell
Install-Module -Name AWS.Tools.DynamoDBv2 -Scope CurrentUser -Force

Get-DDBBackupList -Region us-west-1
```

To delete a specific backup, you can use the following command. Store the Amazon Resource Name (ARN) of the backup in a variable, and then call the Remove-DDBBackup command.

```powershell
$Arn = 'arn:aws:dynamodb:us-west-1:973081273628:table/trevor-products/backup/01712764289962-4338f529'

Remove-DDBBackup -BackupArn $Arn -Region us-west-1 -Force
```
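
Before deleting anything, it helps to narrow the list down to backups past a chosen age. The filter below is a minimal sketch. The function name `Select-DDBOldBackup` and the 90-day default are hypothetical, and it assumes each backup object exposes a `BackupCreationDateTime` property, as the results of `Get-DDBBackupList` do.

```powershell
# Hypothetical helper: pass through only backups older than a given number of days.
function Select-DDBOldBackup {
    param(
        [Parameter(ValueFromPipeline)] $Backup,
        [int] $OlderThanDays = 90,
        [datetime] $Now = [datetime]::UtcNow
    )
    process {
        $cutoff  = $Now.ToUniversalTime().AddDays(-$OlderThanDays)
        $created = $Backup.BackupCreationDateTime.ToUniversalTime()
        if ($created -lt $cutoff) { $Backup }
    }
}

# Example usage (requires AWS credentials). Review the list before deleting anything:
# Get-DDBBackupList -Region us-west-1 |
#     Select-DDBOldBackup -OlderThanDays 90 |
#     Select-Object TableName, BackupName, BackupCreationDateTime, BackupArn
```

Keeping the filter separate from the delete step gives you a chance to inspect the candidates, which matters because a deleted backup cannot be recovered.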

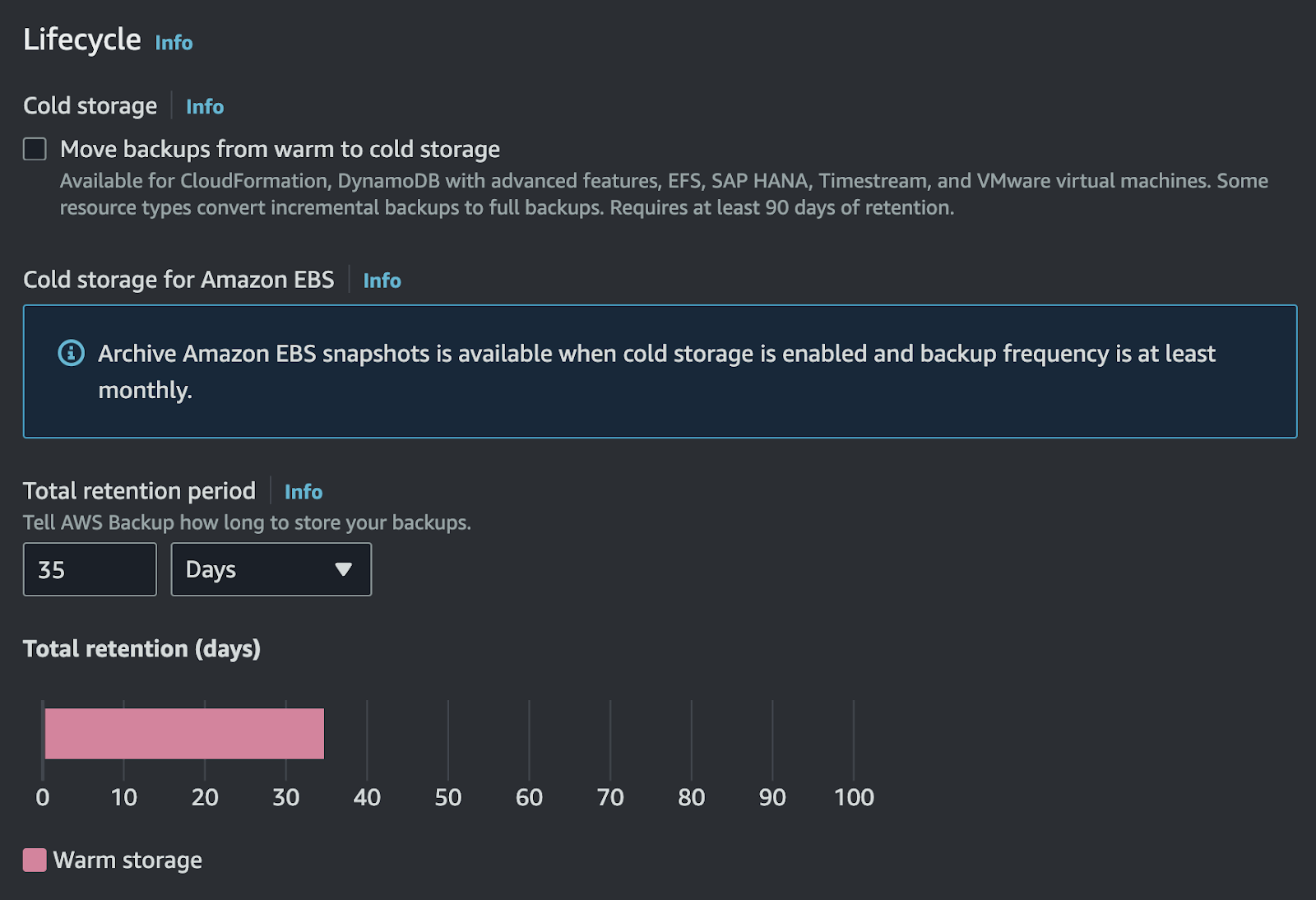

The AWS Backup service is separate from DynamoDB, but supports backing up DynamoDB tables. AWS Backup can also create backups for other AWS data storage resources, such as EBS volumes, RDS instances, S3 objects, and many others.

If you configure a Backup Plan in AWS Backup, you can specify a Lifecycle retention period that will automatically delete older backups.

This provides a simple mechanism to rotate backups without needing to manually manage aging backups in the DynamoDB service directly.

Stratusphere™ FinOps is a Software-as-a-Service (SaaS) solution developed and operated by StratusGrid, which helps you to identify cost savings opportunities across your entire organization.

Stratusphere™ FinOps performs calculations based upon a variety of data sources, including Cost and Usage Reporting (CUR) data, and flags potential cost reductions as “findings.”

You can take action on these findings to reduce your cloud spend, with minimal or no impact to your line of business applications.

Unlock the full potential of your cloud operations with Stratusphere™ FinOps.

With Stratusphere™ FinOps, you gain access to powerful insights that help reduce your spending without impacting performance. Start your free trial now and experience firsthand how Stratusphere™ FinOps can transform your organization’s efficiency and cost-effectiveness.

Contact us for more information.
